Enhancing Data Quality Management with Anomalo’s Machine Learning

January 15, 2022

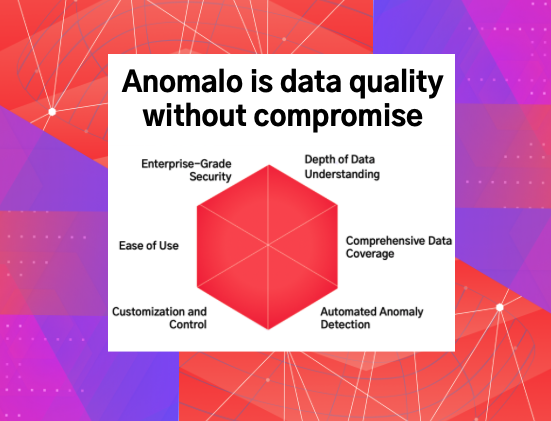

Data quality control is a prerequisite for trusting the reports and machine learning models that rely on the information you curate. Rule-based systems are useful for validating known requirements, but at the scale and complexity of data in modern organizations it is impractical, and often impossible, to manually write rules for every potential error. The team at Anomalo is building a machine-learning-powered platform that identifies and alerts on anomalous and invalid changes in your data so that you aren’t flying blind. In this episode, founders Elliot Shmukler and Jeremy Stanley explain how they have architected the system to work with your data warehouse and surface the critical issues hiding in your data without overwhelming you with alerts.

Categories

- Product Updates
